Introduction

Voice cloning and real-time voice changers represent a major shift in how audio is created, modified, and experienced. No longer reliant on traditional recording methods or costly voice actors, individuals and companies can now synthesize ultra-realistic voices or transform their own voices into entirely different ones with minimal data. This shift has opened new frontiers in entertainment, accessibility, communication, and fraud prevention, while also raising new ethical, legal, and technological challenges.

This in-depth guide focuses on the macro context of voice cloning and voice changers. It explores the foundational elements of the technology, practical implementation steps, ethical considerations, and how to structure content semantically to build topical authority.

Defining the Core Concepts

What Is Voice Cloning?

Voice cloning is the process of creating a synthetic but realistic version of a specific individual's voice. Using a short audio sample of a person's speech, AI models can reproduce their vocal tone, cadence, accent, and emotional expression. In the best cases, the result is speech that is hard to distinguish from the original speaker.

What Is an AI Voice Changer?

A voice changer, particularly one powered by artificial intelligence, is a system that modifies an individual’s voice in real time or through post-processing. This includes changing pitch, perceived gender, or accent, and even applying celebrity or character voices for entertainment or anonymity.

Key Differences

- Voice Cloning creates a new synthetic voice modeled on a real person.

- Voice Changers manipulate existing speech, usually for fun, disguise, or accessibility.

Both are built on similar technological foundations but have different use cases and applications.

Essential Entities and Attributes in Voice Cloning Systems

To understand voice cloning semantically, we must identify the key entities and their attributes. These are the core components that every voice cloning system interacts with:

- Voice Signal: Attributes: waveform, sample rate, duration, amplitude

- Speaker Identity: Attributes: timbre, pitch range, prosody, vocal tract characteristics

- Embedding Vector: A numerical representation of a speaker’s vocal identity used by neural networks to reproduce voice characteristics

- Mel Spectrogram: A time-frequency representation of audio, commonly used as an intermediate step in synthetic speech generation

- Vocoder: A model or algorithm that converts spectrograms into raw audio waveforms

- Style Token and Emotional Profile: Encodes emotional characteristics such as joy, anger, or sadness for more expressive synthesis

- Text Input: Required in text-to-speech systems to guide what content is spoken by the cloned voice

- Audio Fingerprinting and Watermark: Embedded signature in synthetic voices to verify authenticity or trace origin

- Data Corpus: The dataset used to train or fine-tune models, consisting of diverse speech samples

- Inference Pipeline: The final runtime model that receives input text or audio and produces the cloned voice

Each entity contributes to the overall performance, accuracy, and realism of a cloned voice system.

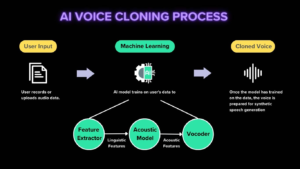

Step-by-Step Breakdown of the Voice Cloning Process

Voice Data Collection

Voice cloning starts with data. This is typically a short but diverse audio sample of the target speaker. Ideally, this data should include different emotions, speaking speeds, intonations, and phonetic diversity. A high-quality microphone, minimal background noise, and acoustic treatment are essential.

Key considerations:

- Sample rate: 44.1 kHz or 48 kHz recommended

- Recording environment: soundproof or at least quiet

- Content: phonetically balanced script or spontaneous speech
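The checklist above can be turned into a quick automated check before any training starts. The following is a minimal sketch using only Python's standard `wave` module; the helper names (`describe_wav`, `check_recording`, `write_test_tone`) and the 30-second minimum are illustrative assumptions, not requirements of any particular cloning system.

```python
import math
import os
import struct
import tempfile
import wave

RECOMMENDED_RATES = (44100, 48000)  # taken from the checklist above


def describe_wav(path):
    """Read basic properties of a PCM WAV file."""
    with wave.open(path, "rb") as wav:
        rate = wav.getframerate()
        return {
            "sample_rate": rate,
            "channels": wav.getnchannels(),
            "bit_depth": wav.getsampwidth() * 8,
            "duration_s": wav.getnframes() / rate,
        }


def check_recording(info, min_seconds=30.0):
    """Return a list of problems. min_seconds is an assumed threshold."""
    problems = []
    if info["sample_rate"] not in RECOMMENDED_RATES:
        problems.append("sample rate is not 44.1 kHz or 48 kHz")
    if info["duration_s"] < min_seconds:
        problems.append("recording is shorter than the minimum duration")
    return problems


def write_test_tone(path, rate=48000, seconds=1.0, freq=220.0):
    """Write a mono 16-bit sine tone so the example runs without a real recording."""
    n = int(rate * seconds)
    frames = b"".join(
        struct.pack("<h", int(12000 * math.sin(2 * math.pi * freq * i / rate)))
        for i in range(n)
    )
    with wave.open(path, "wb") as wav:
        wav.setnchannels(1)
        wav.setsampwidth(2)
        wav.setframerate(rate)
        wav.writeframes(frames)


demo_path = os.path.join(tempfile.mkdtemp(), "sample.wav")
write_test_tone(demo_path)
info = describe_wav(demo_path)
print(info)
print(check_recording(info))
```

A check like this only covers the measurable items (sample rate, duration). Background noise and phonetic balance still need listening tests or dedicated tooling.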

Preprocessing and Feature Extraction

Raw audio is cleaned to remove noise, normalized, and segmented. Features are then extracted, usually mel spectrograms and pitch contours. These features are easier for neural networks to learn from than raw waveforms.

Steps include:

- Silence trimming

- Amplitude normalization

- Spectrogram generation

- Optional: MFCC (mel-frequency cepstral coefficient) extraction for a compact summary of phonetic features
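The first three steps in this list can be sketched in plain Python. This is a simplified illustration under stated assumptions: the thresholds, frame sizes, and function names are made up for the example, the transform is a naive DFT rather than an optimized FFT, and the mel filterbank that would normally be applied on top of the magnitudes is omitted.

```python
import cmath
import math


def trim_silence(samples, threshold=0.02):
    """Drop leading and trailing samples whose absolute value is below threshold."""
    loud = [i for i, s in enumerate(samples) if abs(s) >= threshold]
    if not loud:
        return []
    return samples[loud[0]:loud[-1] + 1]


def peak_normalize(samples, target_peak=0.95):
    """Scale the signal so its largest absolute sample equals target_peak."""
    peak = max((abs(s) for s in samples), default=0.0)
    if peak == 0.0:
        return list(samples)
    return [s * target_peak / peak for s in samples]


def magnitude_spectrogram(samples, frame_len=64, hop=32):
    """Hann-windowed short-time DFT magnitudes, one list of bins per frame."""
    window = [0.5 - 0.5 * math.cos(2 * math.pi * n / frame_len) for n in range(frame_len)]
    frames = []
    for start in range(0, len(samples) - frame_len + 1, hop):
        frame = [samples[start + n] * window[n] for n in range(frame_len)]
        bins = []
        for k in range(frame_len // 2 + 1):
            acc = sum(frame[n] * cmath.exp(-2j * math.pi * k * n / frame_len)
                      for n in range(frame_len))
            bins.append(abs(acc))
        frames.append(bins)
    return frames


# Synthetic input: a 1 kHz tone at 8 kHz sample rate, padded with silence.
rate = 8000
tone = [0.3 * math.sin(2 * math.pi * 1000 * i / rate) for i in range(512)]
raw = [0.0] * 100 + tone + [0.0] * 100

clean = peak_normalize(trim_silence(raw))
spec = magnitude_spectrogram(clean)
peak_bin = max(range(len(spec[0])), key=lambda k: spec[0][k])
print(len(raw), len(clean), len(spec), peak_bin)
```

With a 64-sample frame at 8 kHz, each bin spans 125 Hz, so the 1 kHz tone should peak in bin 8. Real pipelines use far longer frames and an FFT library, but the order of operations is the same.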

Speaker Embedding Creation

A speaker encoder model converts the cleaned audio sample into a fixed-length vector. This vector captures unique speaker attributes such as pitch, resonance, and articulation style. It is used as the identity condition during synthesis.

Benefits of this step:

- Reduces need for large datasets

- Enables transfer of voice characteristics to new text or audio
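To make the "fixed-length vector" idea concrete, here is a toy sketch. Real speaker encoders are trained neural networks; the mean-pooling used below is only a stand-in that shows two properties the text relies on: clips of different lengths map to vectors of the same size, and vectors can be compared with cosine similarity. All function names and feature values are hypothetical.

```python
import math


def mean_pool(frames):
    """Average variable-length frame features into one fixed-length vector."""
    dim = len(frames[0])
    return [sum(f[d] for f in frames) / len(frames) for d in range(dim)]


def l2_normalize(vec):
    """Scale a vector to unit length (returned unchanged if it is all zeros)."""
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else list(vec)


def toy_embedding(frames):
    """Stand-in for a trained speaker encoder: pool, then normalize."""
    return l2_normalize(mean_pool(frames))


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors."""
    a, b = l2_normalize(a), l2_normalize(b)
    return sum(x * y for x, y in zip(a, b))


# Made-up per-frame features: two clips from speaker A, one from speaker B.
speaker_a_short = [[1.0, 0.2, 0.1], [0.9, 0.3, 0.1], [1.1, 0.1, 0.1]]
speaker_a_long = [[1.0, 0.3, 0.1], [1.0, 0.2, 0.1], [0.9, 0.3, 0.2],
                  [1.1, 0.2, 0.1], [1.0, 0.25, 0.0]]
speaker_b = [[0.1, 0.9, 0.8], [0.2, 1.0, 0.7]]

emb_a1 = toy_embedding(speaker_a_short)
emb_a2 = toy_embedding(speaker_a_long)
emb_b = toy_embedding(speaker_b)

print(len(emb_a1), len(emb_a2), len(emb_b))
print(cosine_similarity(emb_a1, emb_a2), cosine_similarity(emb_a1, emb_b))
```

The same comparison is what makes embeddings useful for checking whether a generated clip still resembles the target speaker.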

Speech Generation: TTS or VC

There are two primary approaches:

Text-to-Speech (TTS)

Text is converted into synthetic speech using the speaker embedding. This approach requires a well-aligned training dataset of audio and text pairs and is ideal for audiobook narration, branding, or accessibility solutions.

Voice Conversion (VC)

Input audio from any speaker is transformed into the target speaker's voice using their embedding. This is used for real-time applications, such as streamers changing their voices during broadcasts.
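The difference between the two approaches is mostly a difference in data flow, which a short sketch can show. Everything below is a placeholder: the type aliases, the pipeline functions, and the stub components are assumptions made for illustration, and the stubs do no real synthesis. In an actual system each callable would be a trained model.

```python
from typing import Callable, List

Embedding = List[float]
Spectrogram = List[List[float]]
Waveform = List[float]


def tts_pipeline(text: str, speaker: Embedding,
                 acoustic_model: Callable[[str, Embedding], Spectrogram],
                 vocoder: Callable[[Spectrogram], Waveform]) -> Waveform:
    """TTS: text plus speaker embedding -> spectrogram -> waveform."""
    return vocoder(acoustic_model(text, speaker))


def vc_pipeline(source_audio: Waveform, speaker: Embedding,
                content_encoder: Callable[[Waveform], Spectrogram],
                converter: Callable[[Spectrogram, Embedding], Spectrogram],
                vocoder: Callable[[Spectrogram], Waveform]) -> Waveform:
    """VC: source audio -> content features -> target-voice spectrogram -> waveform."""
    return vocoder(converter(content_encoder(source_audio), speaker))


# Stub components (hypothetical, for shape-checking only).
def stub_acoustic_model(text, speaker):
    return [list(speaker) for _ in text]            # one frame per character


def stub_content_encoder(audio):
    return [[s] for s in audio]                     # one frame per sample


def stub_converter(frames, speaker):
    return [[f[0] * w for w in speaker] for f in frames]


def stub_vocoder(frames):
    return [sum(f) / len(f) for f in frames]        # one sample per frame


target = [0.2, 0.4]
tts_audio = tts_pipeline("hi", target, stub_acoustic_model, stub_vocoder)
vc_audio = vc_pipeline([1.0, -1.0, 0.5], target,
                       stub_content_encoder, stub_converter, stub_vocoder)
print(len(tts_audio), len(vc_audio))
```

Both pipelines share the speaker embedding and the vocoder. Only the front end changes: TTS starts from text, VC starts from someone else's audio.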

Vocoder Processing

After a spectrogram is generated, it must be converted into a waveform. This is the job of the vocoder. High-quality vocoders are crucial for achieving natural-sounding output.

Examples include:

- Autoregressive vocoders: high quality, but slower

- GAN-based vocoders: much faster, with comparable quality

- Non-neural vocoders: used in older systems, limited fidelity
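As a rough illustration of the spectrogram-to-waveform step, the sketch below drives one sine oscillator per frequency bin, using each frame's magnitudes as gains. This is a deliberately crude, non-neural resynthesis: it ignores phase information and is far simpler than any vocoder used in practice. The function name and parameters are assumptions for the example.

```python
import math


def oscillator_bank_resynthesis(frames, frame_len, hop, sample_rate):
    """Turn magnitude frames into audio by summing fixed-frequency sine oscillators.

    Bin k is assumed to sit at k * sample_rate / frame_len Hz. Each frame
    contributes `hop` output samples, and absolute time is used so that the
    oscillator phases stay continuous across frame boundaries.
    """
    out = []
    for frame_index, mags in enumerate(frames):
        total = sum(mags) or 1.0  # keep output within [-1, 1]
        for n in range(hop):
            t = (frame_index * hop + n) / sample_rate
            sample = sum(
                m * math.sin(2 * math.pi * (k * sample_rate / frame_len) * t)
                for k, m in enumerate(mags) if m > 0.0
            )
            out.append(sample / total)
    return out


# Ten frames with all energy in bin 8, which is 1 kHz for these settings.
frames = [[1.0 if k == 8 else 0.0 for k in range(33)] for _ in range(10)]
audio = oscillator_bank_resynthesis(frames, frame_len=64, hop=32, sample_rate=8000)
print(len(audio))
```

Neural vocoders replace this hand-built oscillator bank with a learned mapping, which is where most of the gain in naturalness comes from.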

Postprocessing

Final adjustments are made to the audio:

- Denoising

- Audio smoothing

- Breath insertion

- Optional: watermark or fingerprint insertion

This ensures the final output sounds as human and authentic as possible.
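Two of the simplest smoothing operations can be sketched in a few lines: a moving average to soften sample-to-sample roughness, and a short linear fade at each end of the clip to avoid clicks. Both function names and the default widths are illustrative assumptions; production tools use more careful filters.

```python
def moving_average(samples, width=5):
    """Smooth a signal by averaging each sample with its neighbours."""
    half = width // 2
    out = []
    for i in range(len(samples)):
        window = samples[max(0, i - half): i + half + 1]
        out.append(sum(window) / len(window))
    return out


def fade_edges(samples, fade_len=4):
    """Apply a linear fade-in and fade-out so the clip starts and ends at silence."""
    out = list(samples)
    fade_len = min(fade_len, len(out) // 2)
    for i in range(fade_len):
        gain = i / fade_len
        out[i] *= gain
        out[-1 - i] *= gain
    return out


spike = [0.0, 0.0, 3.0, 0.0, 0.0]
print(moving_average(spike, width=3))   # the spike is spread over its neighbours
print(fade_edges([1.0] * 8, fade_len=4))
```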

Applications and Use Cases

Voice cloning technology is already widely used across industries. Some notable applications include:

- Audiobook Narration: Clone a consistent, professional voice to read entire books.

- Accessibility: People with degenerative diseases can preserve their voices for future use.

- Entertainment: Create character voices for animation, games, and video content.

- Customer Support: Use branded AI voices in interactive voice response (IVR) systems.

- Language Dubbing: Replicate actor voices across multiple languages.

- Virtual Assistants: Humanize responses from chatbots or digital agents.

Real time voice changers are also used in:

- Streaming: Alter voices live to match characters or preserve privacy.

- Gaming: Role play with dynamic voice changes.

- Social media content: Add effects and variety to short-form videos.

Ethical and Legal Considerations

Consent

Cloning a person's voice without explicit permission is ethically wrong and legally risky. Individuals must consent to having their voice cloned, and any use should align with their expectations.

Misuse and Deepfakes

Voice cloning can be used maliciously to impersonate others, scam family members, or bypass voice authentication systems. This raises serious concerns in banking, government, and legal contexts.

Copyright and Ownership

Voices, especially those of public figures, may be protected by likeness laws and intellectual property regulations. Companies must ensure they have licensing rights before cloning a voice for commercial use.

Detection and Watermarking

To mitigate misuse, some systems include detectable audio fingerprints. These help distinguish real from synthetic audio in forensic or legal scenarios.
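The idea of an embedded signature can be illustrated with a toy least-significant-bit (LSB) watermark on 16-bit PCM samples. This is an assumption-laden teaching example, not how any specific product marks its audio: LSB marks are fragile and do not survive compression or resampling, whereas deployed systems aim for watermarks that are robust to such processing. The function names and the `b"SYN"` payload are hypothetical.

```python
def embed_lsb(samples, payload):
    """Hide payload bytes in the lowest bit of the first len(payload) * 8 samples."""
    bits = [(byte >> (7 - i)) & 1 for byte in payload for i in range(8)]
    if len(bits) > len(samples):
        raise ValueError("payload too large for this clip")
    marked = list(samples)
    for i, bit in enumerate(bits):
        marked[i] = (marked[i] & ~1) | bit  # clear the lowest bit, then set it
    return marked


def extract_lsb(samples, n_bytes):
    """Read n_bytes back out of the lowest bits of the samples."""
    out = bytearray()
    for b in range(n_bytes):
        byte = 0
        for i in range(8):
            byte = (byte << 1) | (samples[b * 8 + i] & 1)
        out.append(byte)
    return bytes(out)


pcm = list(range(-50, 50))            # stand-in for integer PCM samples
marked = embed_lsb(pcm, b"SYN")
print(extract_lsb(marked, 3))
print(max(abs(a - b) for a, b in zip(pcm, marked)))
```

Each sample changes by at most one quantization step, which is why the mark is inaudible and also why it is so easy to destroy.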

How to Optimize Voice Cloning Content for Semantic SEO

Following a semantic SEO structure enhances discoverability, credibility, and topical authority. Here is how to implement it:

Use One Macro Context per URL

The main focus should remain clear: this page is about voice cloning and voice changers. Subtopics such as vocoders, style transfer, and emotion synthesis can be explored in separate cluster articles.

Implement Topical Clusters

Create interconnected articles covering:

- Data collection for voice cloning

- Speaker embedding methods

- Real-time vs offline synthesis

- Prosody and emotional control

- Legal implications and consent frameworks

Each page should link back to the core pillar and to other semantically related articles.

Answer Specific User Intent

Anticipate and respond to user questions with clear, structured answers. Use headings like:

- Can I clone my own voice?

- How long does it take to generate a synthetic voice?

- Is it legal to clone celebrity voices?

These responses help earn featured snippets and satisfy user needs quickly.

Use Structured Data

Schema markup should be applied to categorize content properly:

- FAQPage for question-and-answer sections

- HowTo for process-based guides

- CreativeWork for voice demo pages

This improves crawlability and increases the chance of enhanced search results.
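As a sketch of what such markup looks like, the snippet below builds schema.org `FAQPage` JSON-LD from question and answer pairs. The helper name `faq_jsonld` is an assumption; the `@type`, `mainEntity`, and `acceptedAnswer` fields follow the schema.org vocabulary. The two sample questions are taken from this article.

```python
import json


def faq_jsonld(pairs):
    """Build a schema.org FAQPage object from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }


faq = faq_jsonld([
    ("What is a vocoder in voice cloning?",
     "A vocoder converts a spectrogram into an actual sound waveform for playback."),
    ("What are the risks of voice cloning?",
     "Risks include identity fraud, deepfake scams, impersonation, and breaches "
     "of voice-based security systems."),
])
markup = json.dumps(faq, indent=2)
print(markup)
```

The resulting JSON is typically placed in a `<script type="application/ld+json">` element in the page's HTML.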

Challenges in the Field

Despite major advances, voice cloning still faces several challenges:

- Data scarcity: Some languages or demographics are underrepresented in training data.

- Accent preservation: Cloned voices may lose original accent nuances.

- Emotion synthesis: Expressive speech is hard to model consistently.

- Real-time constraints: Achieving low-latency synthesis on mobile devices is still evolving.

- Bias and fairness: Models may favor common speaker types (for example, American English or male voices).

Ongoing research is addressing these issues, with solutions emerging through larger datasets, better transfer learning, and multilingual training.
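The real-time constraint listed above comes down to simple arithmetic. A frame-based system cannot emit audio until it has collected a full frame, so the frame size sets a floor on latency, and the model must process each frame faster than the frame lasts. The function names and the example numbers below are illustrative assumptions.

```python
def buffer_latency_ms(frame_size, sample_rate):
    """Time needed to collect one frame of audio, in milliseconds."""
    return 1000.0 * frame_size / sample_rate


def real_time_factor(processing_seconds, audio_seconds):
    """Processing time divided by audio duration; below 1.0 keeps up with live input."""
    return processing_seconds / audio_seconds


# Example: 480-sample frames at 48 kHz, with 4 ms of compute per frame (assumed).
latency = buffer_latency_ms(480, 48000)
rtf = real_time_factor(0.004, 0.010)
print(latency, rtf)
```

Smaller frames lower the latency floor but give the model less context per step, which is one reason low-latency conversion on mobile hardware remains difficult.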

Future Trends in Voice Cloning

Looking ahead, voice cloning is poised to become even more versatile and accessible. Key trends include:

- Zero-shot Cloning: Cloning voices from a few seconds of audio without retraining models.

- Cross-lingual Voice Cloning: Translating and synthesizing voice in languages the speaker never used.

- Expressive Control: Finer control over tone, pacing, and inflection.

- On-device Processing: Mobile-compatible, real-time voice changers for consumer apps.

- Biometric-resistant Design: Preventing voiceprint spoofing in secure systems.

- Voice Privacy Tools: Anti-cloning tools to scramble voice patterns or detect unauthorized clones.

These innovations will define the next decade of voice technology.

Frequently Asked Questions

What is AI voice cloning?

AI voice cloning is the process of creating a synthetic version of a person's voice using short audio samples, allowing text or speech to be reproduced in that voice.

How does a voice changer differ from voice cloning?

Voice changers modify an existing voice in real time, while voice cloning creates a new synthetic voice modeled on a specific speaker.

How much audio is needed to clone a voice?

Many systems ask for roughly 1 to 5 minutes of clean audio, though some zero-shot models can work from just a few seconds.

Can a cloned voice speak other languages?

Yes, cross-lingual voice cloning allows a synthetic voice to speak languages the original speaker may not know.

Is it legal to clone someone's voice?

Only if you have explicit consent. Using someone's voice without permission can violate privacy and likeness rights.

What are the risks of voice cloning?

Risks include identity fraud, deepfake scams, impersonation, and breaches of voice-based security systems.

Can synthetic voices be detected?

Yes, through watermarking or forensic analysis, though high-quality fakes are becoming harder to detect.

What is a vocoder in voice cloning?

A vocoder converts a spectrogram (a visual representation of audio) into an actual sound waveform for playback.

Is it possible to clone a voice in real time?

Yes, real-time voice changers with speaker conversion can alter voices almost instantly during live communication.

What are common uses of voice cloning?

Popular use cases include audiobooks, virtual assistants, film dubbing, gaming characters, and assistive speech devices.

Conclusion

AI voice cloning and voice changers have matured into transformative tools across industries. From virtual avatars to accessible communication, the ability to synthesize and modify voices has reshaped how we interact with digital systems. Yet with power comes responsibility. Ethical implementation, strong legal frameworks, and transparent disclosure must guide the use of this technology.

As the field evolves, businesses, creators, and technologists who understand the semantic structure, technical components, and practical applications of voice cloning will be best positioned to innovate and to lead.